October 28th, 2012

XML will be celebrating its official 15th anniversary on February 10, 2013.

By many measures it has been a huge success. There are thousands of XML dialects in use across a vast swath of the sciences, as well as in business and ecommerce.

By the measure of the book publishing community, it’s been less than a huge success.

How much less is a subject for conjecture: I can’t find anything resembling usable data on XML adoption in the book publishing community.

Part of the challenge of measuring XML adoption in book publishing is that there is no single book publishing community. It’s broken up into several groups. Even the divisions can be divisive. But let’s just try some groupings, roughly by increasing complexity and variety of page design.

1. Trade publishing (including children’s, although page design for that segment is far different)

2. Educational publishing (including both K-12 and higher education, although page design is far different for each segment)

3. STM, or Scientific, Technical and Medical (including reference and journals)

XML’s proponents, a diverse group of wise and practical men and women, mostly believed that XML was suited to all forms of book publishing. I was among those proponents.

But it didn’t happen.

The degree of XML adoption appears to be roughly inverse to the order above. In other words, STM has a very high degree of XML usage, educational perhaps 50/50, and trade publishing something less than 5%. That means XML has met with mixed results in a group that seemed a natural fit: educational publishing, particularly higher ed. And XML has failed altogether for trade publishers (with only a handful of exceptions).

I want to understand why.

I think the answer is straightforward. XML is tremendously powerful but it is far too complex. Every effort to simplify XML workflows has failed to make them simple enough for most editorial and production workers below the STM level. And the benefits have not been large enough to persuade management to invest in compulsory training for the people who would need it.

My research on XML comprises over 550 files and some 600 megabytes of data. Plenty. The first file dates back to 1999 when I made notes at an Adobe briefing in San Jose. John Warnock, then Adobe’s president, said that “there is a broad misunderstanding that XML and PDF compete. We do not view XML as a competitor to PDF, but plan to incorporate XML encoding in PDF, as in most Adobe products.”

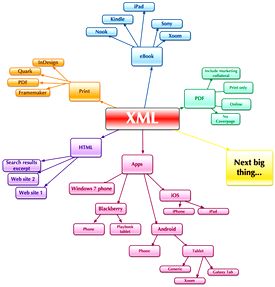

A Google search on “Adobe and XML” today reveals that there is significant support for XML in Adobe Acrobat, Adobe InDesign and Adobe FrameMaker. In none of those products is the support complete. I guess it’s much better than nothing: people manage XML workflows using each product. But the inconsistency of Adobe’s approach to XML is, to me, indicative of the broader XML problem. When it comes to mainstream publishing, the use case is muddled. The best tools for XML publishing are specialized and sit outside the mainstream of authoring and production.

Indicative also of the XML mess is Microsoft’s very inconsistent history with XML support. Microsoft Office 2007 was at one point intended as a showcase for XML in the mainstream of document publishing. This was to have been a very big deal for XML. It failed slowly and painfully. It’s unusual to hear the letters X & M & L mentioned in sequence at Microsoft today.

What To Do Now?

I see three kinds of XML people in publishing today:

1. Those who have mastered XML to their satisfaction and use it productively.

2. Those who are still struggling to make XML work.

3. Those who gave up, along with the majority who never bothered trying to make XML work.

Once again, I wish I had some hard numbers but they’re lacking.

My sense is that we’re looking at 4/1/95. In other words, out of every 100 publishing professionals, 4 have got XML helping with their workflows (in many cases not a “full” or “robust” XML workflow, but it does the trick).

One in 100 is stuck in that painful purgatory where they’re trying to make a go of XML, either because they think it will help them or because they’ve been ordered to do so.

And the 95% sleep soundly every night never dreaming of embedded elements. God bless ’em.

Where Do We Go Next?

It’s my conviction that the benefits of an XML workflow are so enormous and so compelling that we have to find a way to make it work.

I’m going to be co-presenting a webinar for Aptara on November 8th. It’s a bit of a wolf in sheep’s clothing. We’re calling it A Roadmap to Efficiently Producing Multi-Format/Multi-Screen eBooks, but it’s secretly about XML and publishing. Because, after all, how else are you going to efficiently produce content for multiple formats without XML?
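The single-source idea behind that question can be sketched in a few lines of Python. This is a minimal illustration, not anyone’s production workflow: it assumes a hypothetical toy vocabulary (`chapter`, `title`, `para`, `emph`) rather than a real publishing schema, and renders one XML source to both HTML and plain text. A real workflow would use an established schema and a transformation tool such as XSLT.

```python
import xml.etree.ElementTree as ET
from html import escape

# Hypothetical toy markup for illustration only; not DocBook or any real schema.
SOURCE = """<chapter>
  <title>What To Do Now?</title>
  <para>XML can be <emph>simplified</emph>.</para>
  <para>Who is with me?</para>
</chapter>"""


def inline(elem, wrap_emph, esc):
    """Flatten mixed content: escape plain text, pass <emph> text through wrap_emph."""
    parts = [esc(elem.text or "")]
    for child in elem:
        if child.tag == "emph":
            parts.append(wrap_emph(esc(child.text or "")))
        parts.append(esc(child.tail or ""))
    return "".join(parts)


def to_html(root):
    """Render the chapter as a simple HTML fragment."""
    lines = []
    for elem in root:
        body = inline(elem, lambda s: f"<em>{s}</em>", escape)
        if elem.tag == "title":
            lines.append(f"<h1>{body}</h1>")
        elif elem.tag == "para":
            lines.append(f"<p>{body}</p>")
    return "\n".join(lines)


def to_text(root):
    """Render the same chapter as plain text, marking emphasis with underscores."""
    lines = []
    for elem in root:
        body = inline(elem, lambda s: f"_{s}_", lambda s: s)
        if elem.tag == "title":
            lines.append(body.upper())
        elif elem.tag == "para":
            lines.append(body)
    return "\n\n".join(lines)


if __name__ == "__main__":
    root = ET.fromstring(SOURCE)
    print(to_html(root))
    print()
    print(to_text(root))
```

The point of the design is that the content is written once, with its structure marked up, and each output format is just another renderer over the same tree.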

XML can be simplified.

People try to simplify it today by adopting a subset of a full XML workflow. This is a good solution that works well for some. But it doesn’t address XML’s fundamental complexity.

We’ve come a long way since Tim Bray, Jean Paoli and C. M. Sperberg-McQueen turned the Extensible Markup Language (XML) 1.0 into a W3C recommendation.

It’s time to develop ABCML, the ABC of markup languages. Who’s with me?

Some Background

Here is a list of resources for those interested in XML, organized by topic.

A. Introductions to XML

I’ve chosen a handful of the simplest introductions to XML that I’ve found on the web (and one book). If you are new to XML, you will quickly appreciate its complexity as you try to digest even these simplified explanations.

1. A Gentle Introduction to XML: Updated in 2019.

2. An Introduction to XML Basics: Steven Holzner’s 2003 guide on the Peachpit Press site.

3. A Designer’s Guide to Adobe InDesign and XML: This 2008 book by James Maivald and Cathy Palmer is extremely well written. It’s specifically aimed at designers, which is to say that it’s written in a language that a designer can (at least potentially) understand. So it’s as good as it gets, but I think you’ll still find it challenging. This is the acid test of whether XML can be made safe for families with small children.

4. Work with XML: Adobe’s explanation of the intersection of Adobe InDesign with XML.

B. XML as a Failed State

1. XML Can Go to H***: One Designer’s Experience with the “Future of Publishing” (2004): According to Susan Glinert, who bears XML battle scars, the future is not bright.

2. The Truth about XML (2003): Systems powered by XML might someday prove to be the standard for information sharing between businesses, but not in the near future.

3. What will it take to get (end user) XML editors that people will use? (2011): Norman Walsh is a prominent proponent of XML, but here he examines the challenges surrounding the tools and user expectations.

4. XML Fever (2008): An in-depth piece from Erik Wilde and Robert J. Glushko, later published by the ACM: “This article is about the lessons gleaned from learning XML, from teaching XML, from dealing with overly optimistic assumptions about XML’s powers, and from helping XML users in the real world recover from these misconceptions.”