August 4th, 2024

(Note, after publication: The headline of this post is meant to be ironic, but I can see it doesn’t parse that way.)

There are two separate, though related, camps of AI naysayers. One camp accepts that the latest AI technology is indeed powerful, and that its tremendous power is at the heart of the AI threat. The other camp thinks that generative AI is BS, that it’s fundamentally and fatally flawed technology. And, they believe, the emperor’s lack of clothing will soon be revealed for all to behold.

In the midst of these two camps, it can be uncomfortable to be a techno-optimist, which I decidedly am. I’m blown away by what I see enabled by generative AI. And I keep seeing more, not less.

In my new book I look at the many threats from generative AI and then comment that “the concerns around AI are serious. The risks are real. Sometimes they are expressed in hysterical ways, but, when you drill down, the impact of AI has the potential to be enormously destructive.”

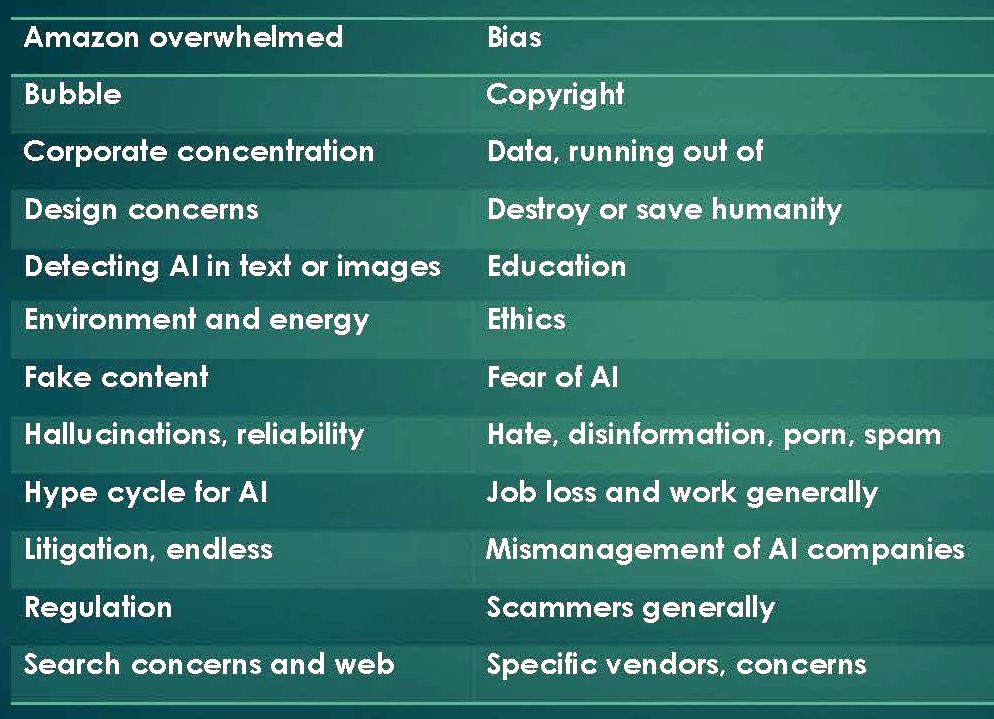

Separately I made a chart of some of the threats I’ve been tracking. The list is indeed a long one.

I note in the book that “it’s possible you’ll conclude that the risks outweigh the benefits, and that you don’t want to pursue the use of AI, whether personally or within your organization.” I argue that the decision is a personal choice, not one that should be dictated by the technorati.

But it’s not these threats that I want to consider in this post. It’s the notion that the whole damn thing is about to come crashing down.

Beyond the threat brigade is a group that argues that the science and technology within generative AI is fundamentally defective. And, if that’s the case, the business model collapses.

There are several reasons proffered. One is that AI is running out of data, out of fresh text-based content that it can sink its jagged teeth into. Another is that the hallucination problem — making stuff up — is endemic to the technology, and cannot be solved. If it can’t be solved, AI engines will never be reliable; they cannot be counted on. And if they can’t be counted on, they’ll never be widely adopted.

Alongside these issues, there’s a belief that AI companies will have to start paying for the content they ingest, not just through a few select licensing deals, and that they’ll never be able to afford the cost of licensing the whole damn thing (online, and beyond, i.e. books and periodicals). The AI engines always need fresh ~~blood~~ content, and content owners are on to them: a recent report suggests that over 35% of the world’s top 1000 websites are now blocking OpenAI’s GPTBot web crawler. They’re driving the AI companies into a copyright corner.

The final proof, for several of the naysayers, is this past week’s financial news. Different journalists slice it differently. But, as explained by Carmen Reinicke at Bloomberg, earnings growth at many of the big AI-connected companies “has slowed to nearly 30% in the second quarter, down from 50% in the prior period.” That’s growth in earnings, not the earnings themselves — the companies are still growing by leaps and bounds. But, apparently, investors have priced further madcap growth into the share prices. If AI fails to deliver on its promise, and hyperbolic growth can’t be sustained, then the whole thing will collapse.

Take a look at some of the other headlines from this past week:

- Why the collapse of the Generative AI bubble may be imminent – Gary Marcus

- Wall Street’s $2 Trillion AI Reckoning — Kevin T. Dugan, NYMag

- Nvidia is in a bubble and the AI theme is “overhyped” — Elliott Management

- Big Tech’s AI Promises Become a ‘Show Me’ Story For Investors — Bloomberg

- Is Big Tech’s AI Starting To Run Out of “Other People’s Money” — Mind Matters

- Sam Altman is becoming one of the most powerful people on Earth. We should be very afraid —Gary Marcus, The Guardian

Gary Marcus is a one-man wrecking band for generative AI. He blogged his disdain four times in the week between July 24 and July 31. The thing with Marcus is that he has tremendous credibility; his resume is impeccable. He’s got a Ph.D. from MIT, mentored by Steven Pinker, and was part of several AI-connected startups. He has an intimate understanding of the science.

But gosh is he angry. Yesterday he opined in his newsletter: “I just wrote a hard-hitting essay for WIRED predicting that the AI bubble will collapse in 2025 — and now I wish I hadn’t. Clearly, I got the year wrong. It’s going to be days or weeks from now, not months.”

The collapse, he says, “will be financial.”

“VCs will radically pull back on funding for AI startups before the end of the year,” he writes, “and many AI startups will struggle to get their next round of funding.” He now seems enthusiastic about the long-term prospects for the science, “but the short term is likely going to be rough. Especially on those investors and pension funds that bet big on AI startups that subsisted on hype and had little revenue.”

He’s just as hard on Sam Altman, OpenAI’s CEO. In his Guardian article he suggests that he, the U.S. Senate, “and ultimately the American people,” had probably “been played (by Altman).” Marcus intimates that Altman lied in his Senate testimony (“wasn’t telling the full truth”). He quotes journalist Ed Zitron, who wrote a scathing critique of Altman in June, calling Altman “a seedy grifter,” “a master manipulator,” “a monument to the rot at the core of (Silicon) valley,” “a specious opportunist and manipulator,” and more.

As off-putting as his vituperation can be, with Marcus’s credentials, it’s impossible to just dismiss his arguments. But a collapse “days or weeks from now”? What’s an AI booster to do?

I think I’ll stay the course for now.

Stay tuned.

PS:

It’s been a crazy Monday, August 5, 2024. The stock market took a nose-dive. The Economist suggests that’s because of “fears of a global recession and concerns that hopes for artificial intelligence had been unrealistically high.”

Google was found to be a monopolist, in a 277-page ruling. Of course it will appeal. Good luck with that.

Gary Marcus, discussed above, emitted two newsletters today, here and here. The second references breaking news (as of 5:31 pm PDT) that John Schulman, an OpenAI co-founder, has left the company for AI rival Anthropic. Greg Brockman, OpenAI’s president, and Peter Deng, a “product leader,” also departed. Brockman was an Altman stalwart who stuck by him during the November 2023 putsch, and so his departure, for “a sabbatical,” has extra weight.

Lots of others are weighing in. The always interesting Ted Gioia wrote yesterday that we can “Expect Heavy Turbulence for the Next 5 Months.”

August 19, 2024. The stock market did what it often does: freaked out (on August 5), then got freaked out that it had freaked out, and freaking recovered.

The AI-connected tech stocks are still down from their summer peaks, but have grabbed back much of what was lost.

The Economist today posted an article “Artificial intelligence is losing hype,” paywall-protected (but no doubt available at your public library). The article is mostly a drill-down on something called the “Hype Cycle for Artificial Intelligence,” put out by Gartner, a well-established technology analysis and consulting company (“15,000 employees located in over 100 offices worldwide”). The cycle follows predictable patterns, from the “Peak of Inflated Expectations” to the “Trough of Disillusionment” (which is where some argue generative AI has landed). But redemption follows, as the technology moves through the “Slope of Enlightenment” toward the promised land, the “Plateau of Productivity.”

But The Economist delves into the record and finds that “of all the forms of tech which fall into the trough of disillusionment, six in ten do not rise again.” Will generative AI be one of the forty percent that do?